── .✦ EXPERIMENT 5 ✦.──

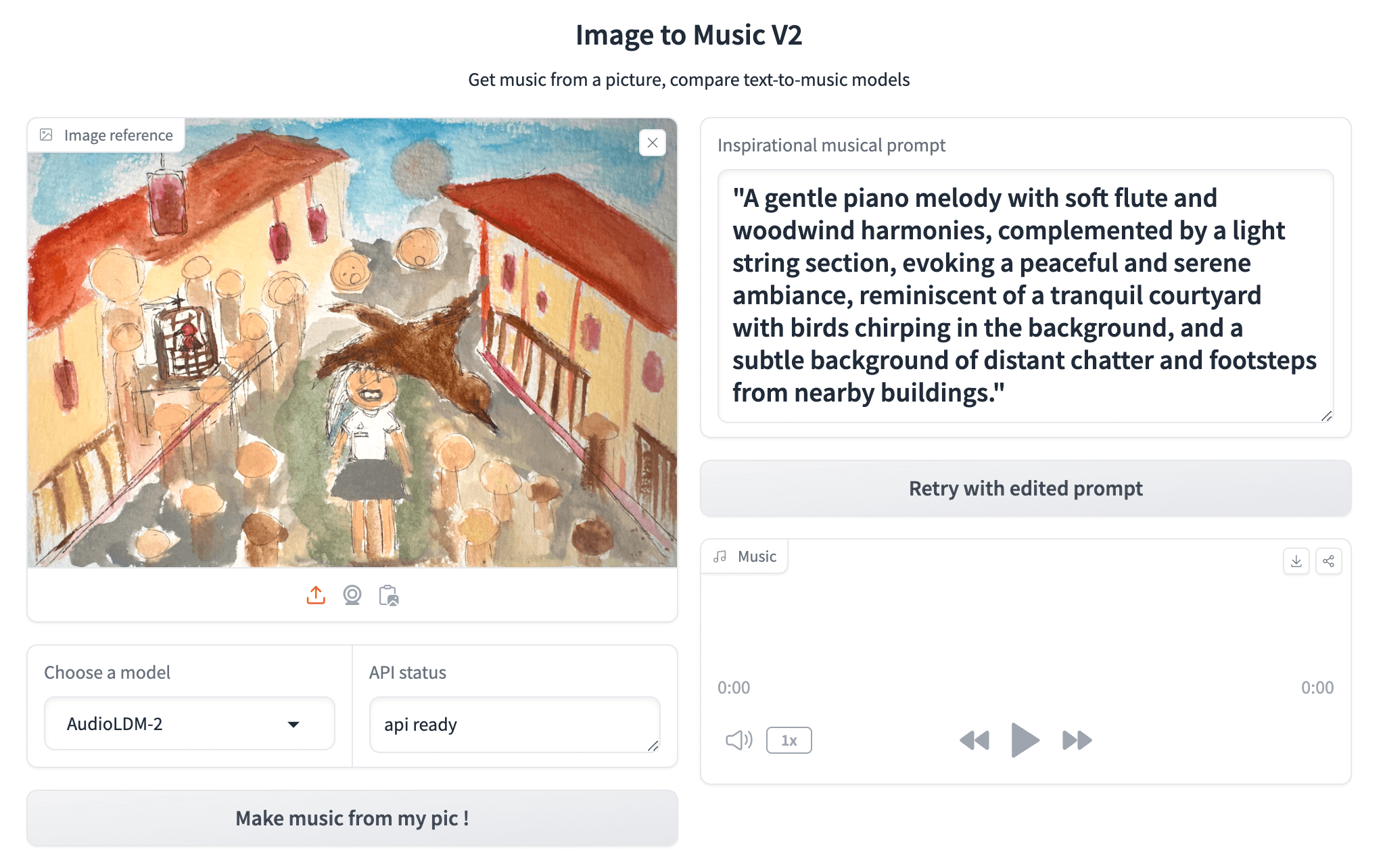

5 ꕀ Translating hand drawings to AI Generated Sound to form an Audio-Reactive Visual

OBJECTIVE

To explore how drawn visuals can be translated into sound, using Generative AI models and experimenting with prompts that alter the audio output. This process aims to understand how visual and audio components interact in recalling memory and eliciting an emotional response. The project also aims to create dynamic multisensory experiences by using audio-reactive visuals built in TouchDesigner to design animated memory poster GIFs.

These explorations align with the overarching goal of understanding how sound and visuals interact to influence memory perception and emotional engagement.

The Tasks:

- ✶ Translate hand drawings to sound

- ✶ Using Generative AI models

- ✶ Prompts to change sound

- ✶ Audio-reactive visuals in TouchDesigner

- ✶ Animated memory poster GIF

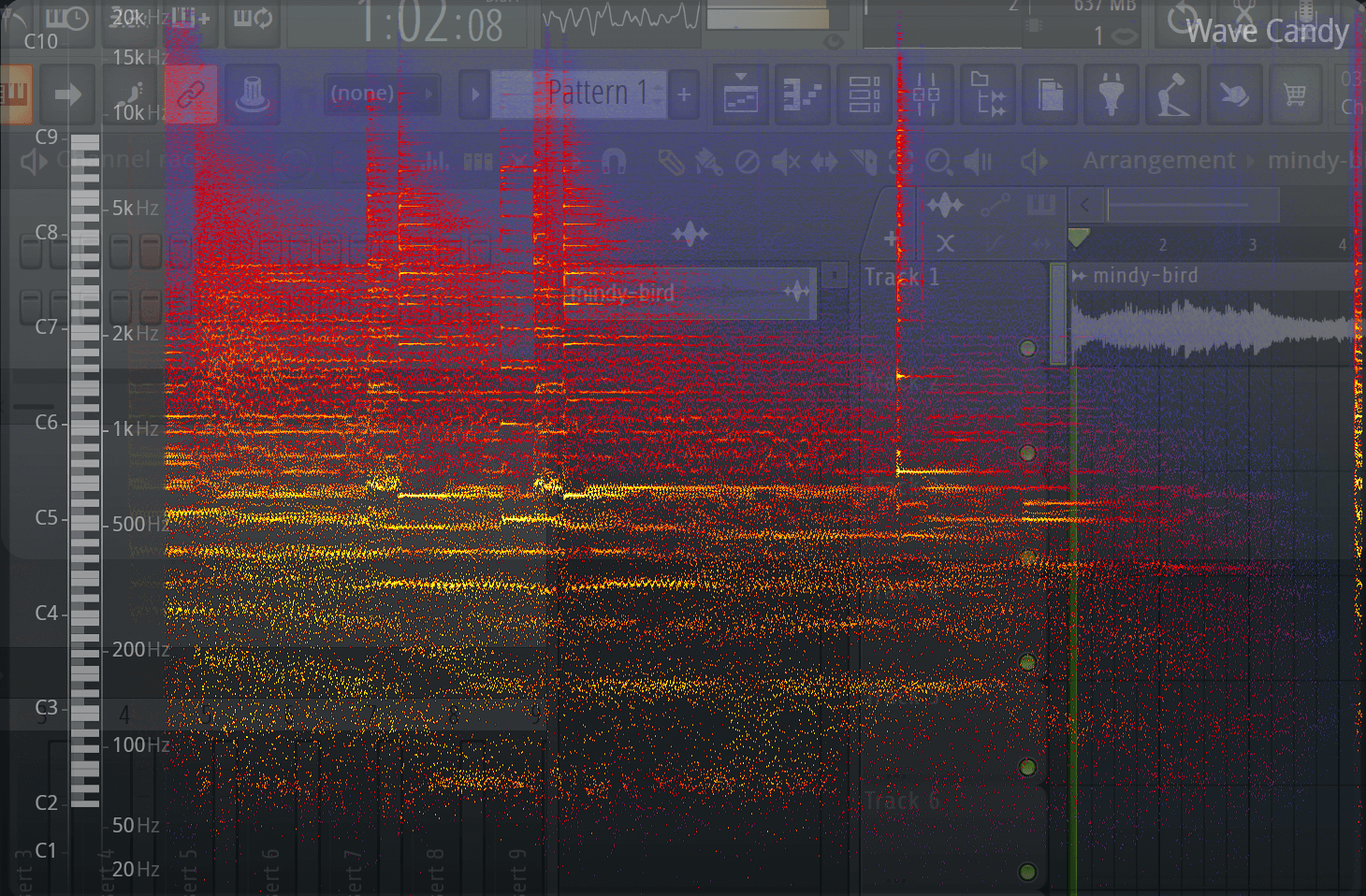

COMPARING SOUNDWAVES

Original hand drawing soundwave

AI-generated audio imported into FL Studio to visualise its soundwave

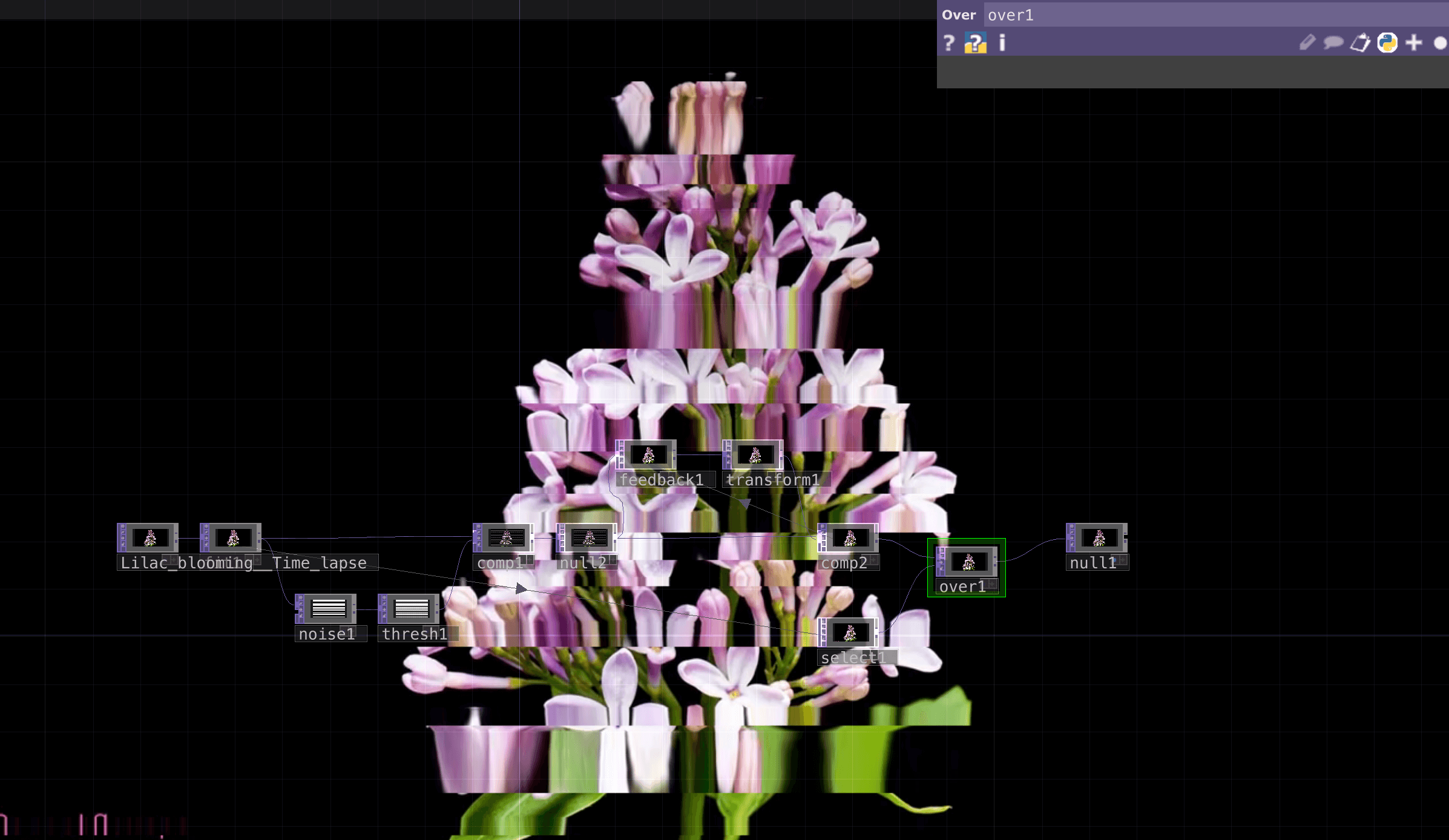

01 AUDIO-REACTIVE VISUALS USING TOUCHDESIGNER

Glitch feedback -

To mimic what a fragmented memory looks like

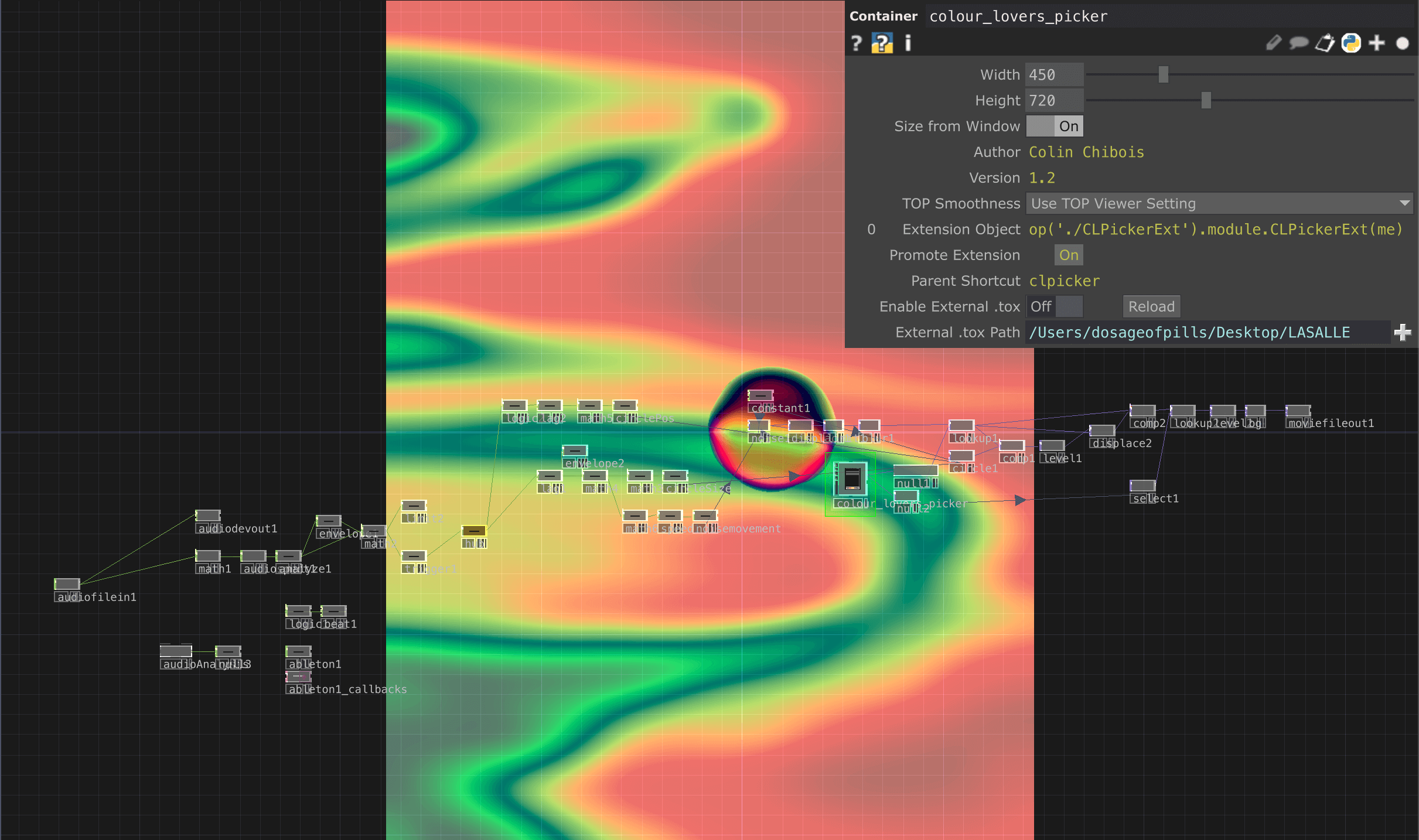
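As a rough illustration of what "audio-reactive" means here, the sketch below measures the loudness of a sound once per video frame and maps it to a visual parameter such as the size of a shape. This is not the TouchDesigner network used in the project; it is a minimal Python sketch of the general idea. The function names `rms_per_frame` and `to_scale`, the frame rate, and the synthetic fade-in tone are all assumptions made for illustration.

```python
import math

def rms_per_frame(samples, sample_rate, fps):
    """Split mono audio samples into frame-sized windows and return the RMS loudness of each."""
    window = max(1, sample_rate // fps)  # number of audio samples per video frame
    levels = []
    for start in range(0, len(samples), window):
        chunk = samples[start:start + window]
        levels.append(math.sqrt(sum(s * s for s in chunk) / len(chunk)))
    return levels

def to_scale(level, min_scale=1.0, max_scale=2.0):
    """Map a 0..1 loudness level to a visual scale factor, clamped to the given range."""
    level = min(max(level, 0.0), 1.0)
    return min_scale + (max_scale - min_scale) * level

# Hypothetical input: one second of a 440 Hz tone that fades in from silence.
sample_rate = 8000
samples = [
    (n / sample_rate) * math.sin(2 * math.pi * 440 * n / sample_rate)
    for n in range(sample_rate)
]

levels = rms_per_frame(samples, sample_rate, fps=25)
scales = [to_scale(level) for level in levels]  # one scale value per video frame
```

In this sketch the shape would start near its resting size and grow as the tone gets louder. A real-time tool performs a comparable analysis on the live audio signal and feeds the result into visual parameters.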

02 THE AUDIO-REACTIVE MEMORY BALL

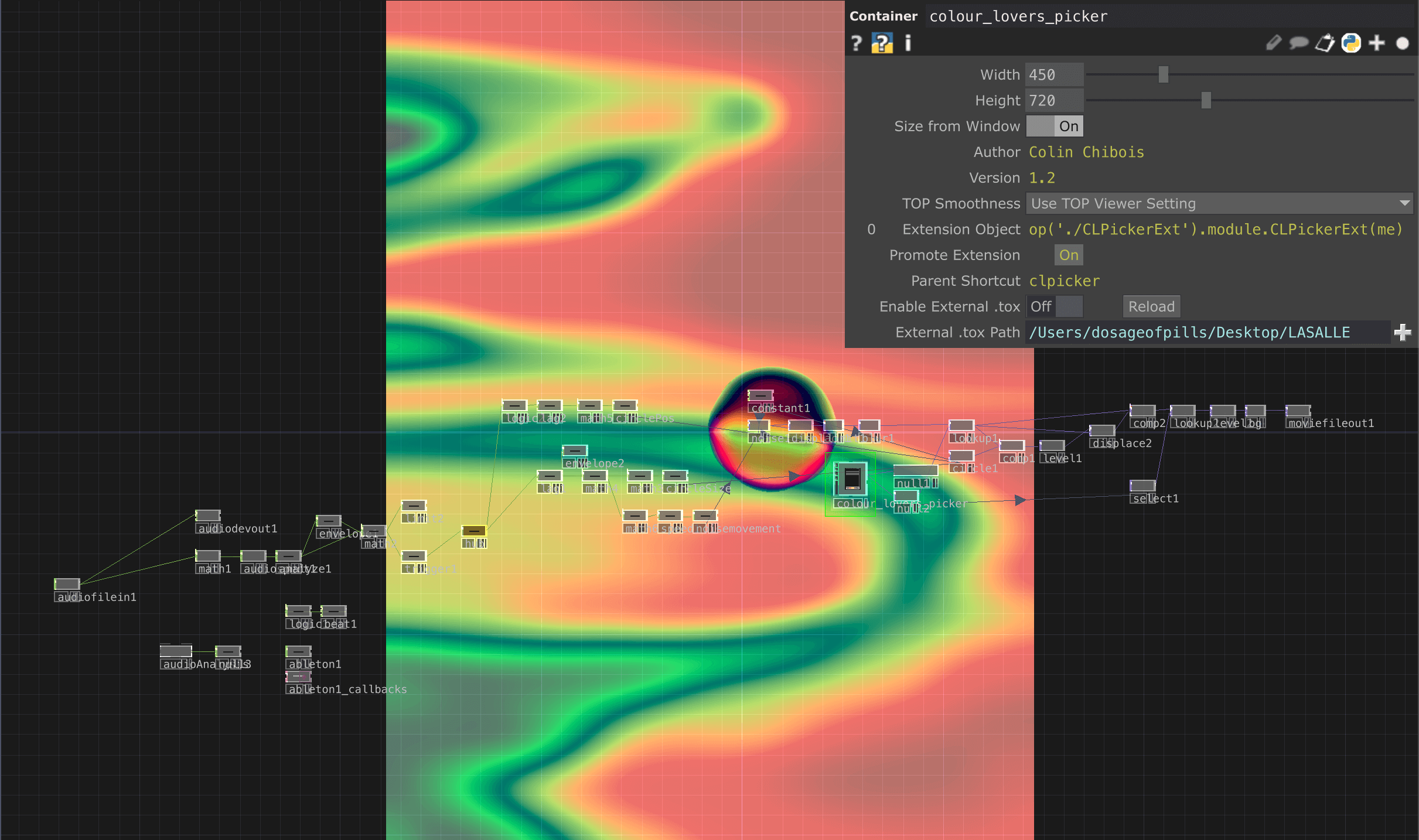

TouchDesigner work process - using the colour picker tool to carry the same colours from the hand drawing into the audio-reactive animation

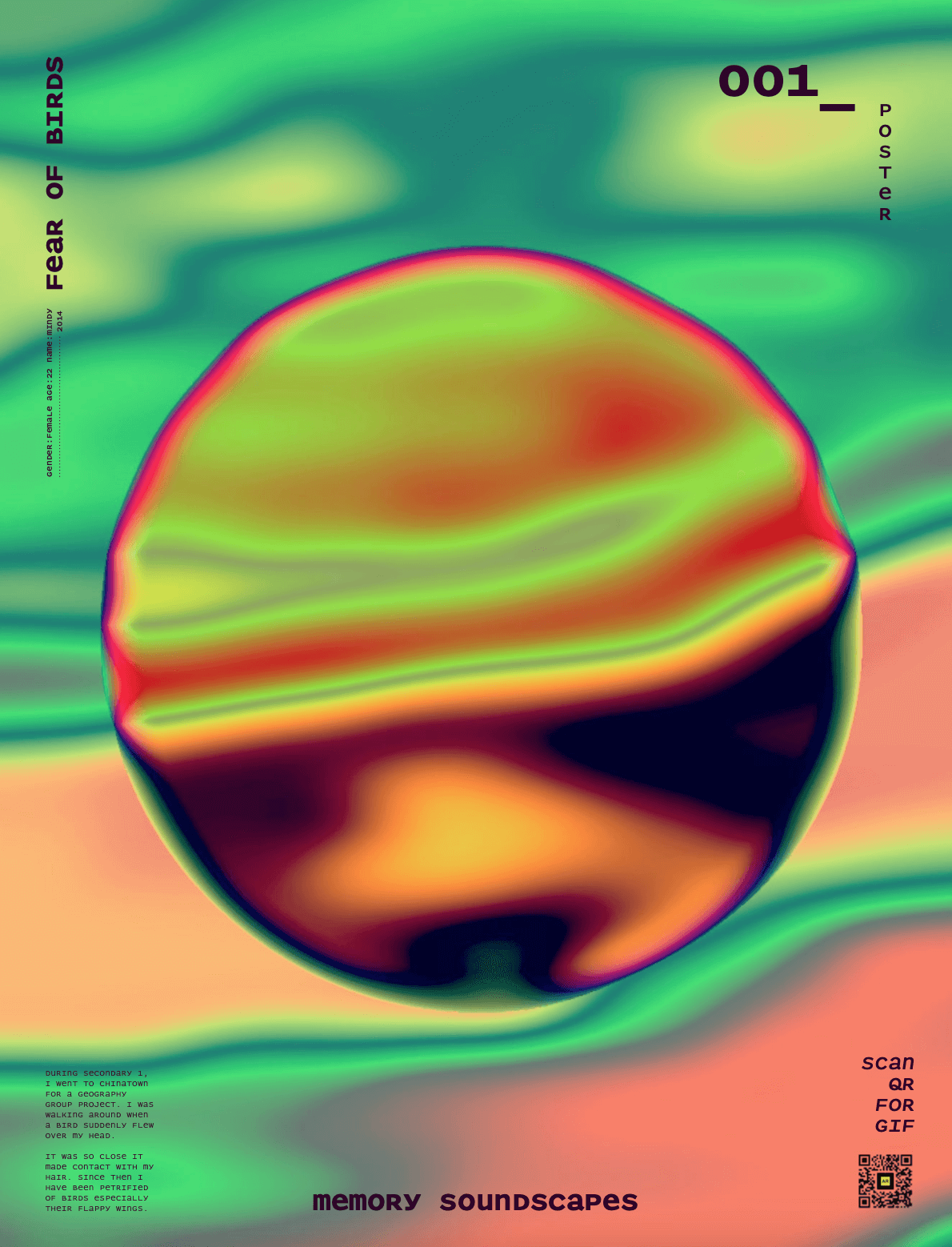

Memory Ball audio-reactive animation made in TouchDesigner

Memory Ball poster